The Combinatorics of Composition

Motivation

Suppose you are an electronic engineer, and you want to connect two integrated circuits. These are doodads that look something like:

You can think of an integrated circuit as a spider which senses things through its legs. To make two integrated circuits talk to each other, you connect their legs. Then you can think of the two integrated circuits that are connected to each other as a single integrated circuit that can then communicate with other integrated circuits. In fact, inside the black spidery body of the integrated circuit there are many many subcircuits all connected to each other.

Anyways, the point is that we build electronic circuits by joining together smaller electronic circuits. This is uncontroversial.

What is slightly more controversial is that if we want a reasonable theory of electronic circuits, this compositional behavior must be taken into account. In other words, we want to derive the behavior of an electronic circuit from the behavior of its parts and the interactions between those parts.

If you have learned about electronic circuits in a physics class, then you are probably used to thinking about an electronic circuit as a collection of resistors, inductors, and capacitors wired together. But in the real world, electronic circuits are big complicated beasts, and if you try to analyze them by thinking of the entire circuit as a big network of resistors, inductors, and capacitors, you will have a bad time. In other words, for practical reasons we want to be able to analyze parts of a circuit separately, and then put them together afterwards.

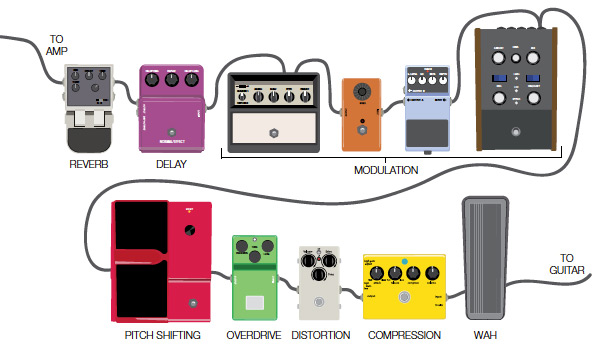

For instance, consider a chain of guitar effects pedals. Each effect is composed of many tiny circuits all working together. But the effect as a whole can be understood as, for instance, cutting out high frequencies from the signal, or just amplifying the signal (which seems simple, but is actually somewhat hard to do without distortion, and requires a more complex design than you would think). Complex circuits can have simple behavior, and so it is useful to derive that simple behavior and then forget about the internal details.

Guitar effects pedals are somewhat special, however, because on a guitar effects pedal there is a clearly marked “input” port and a clearly marked “output” port. Given two guitar effects pedals, there is an obvious way to compose them; connect the input port of one to the output port of the other. All you have to choose is which effect comes first.

However, if I were to give you two arbitrary electronic circuits and say “compose these”, you wouldn’t have the slightest idea how to do so. There are a lot of different ways to compose two circuits.

In this post, we are going to discuss a mathematical construction called a “cospan” (pronounced koh-span) that solves this problem. Cospans allow the data of “input” ports and “output” ports to be attached to an electronic circuit, and at the same time give a good account of how to fuse two electronic circuits together. In other words, cospans allow us to go from messy circuits that electronic engineers struggle with to plug and play effects pedals that musicians use without thinking about it.

Graph Composition

The fundamental difficulty in composing electronic circuits comes not from the electronics, but from the shape. Therefore, before we tackle the problems of resistors, inductors, and capacitors, we are first just going to talk about composing graphs.

Recall that a graph G is comprised of a set of nodes N, a set of edges E, a function s : E \to N which assigns a source to each edge, and a function t : E \to N which assigns a target to each edge. In this blog post, we allow multiple edges between nodes, and we also allow edges from a node to itself. A graph homomorphism f between G_1 = (N_1,E_1,s_1,t_1) and G_2 = (N_2,E_2,s_2,t_2) is a tuple of two functions f_N : N_1 \to N_2 and f_E : E_1 \to E_2 such that the following diagram commutes:

Now, to compose two graphs, one simple solution would be two just join two vertices with an edge. Then the data required to specify inputs and outputs could just be restricted to a choice of input node and a choice of output node.

But we want a finer notion of composability. Generally, electronic circuits need to be composed along multiple wires; for example a USB port has four wires (if you are reading this on a device with a USB port, you can look and verify this!) To get this finer notion, we will compose by gluing together subgraphs. By this I mean that we designate part of the graph to be the “input” and part of the graph to be the “output”. Then we compose the two graphs by gluing together the output subgraph of one graph to the input subgraph of another graph, as in the following picture.

This is fairly intuitive; the question is how do we model this formally? One way would be to just say that the graph is equipped with two subgraphs for input and output. There are a couple problems with this, however. First of all, even if the input subgraph of one graph is isomorphic to the output subgraph of another, there may be many choices of this isomorphism. The concrete instantiation of this is that we need some way of remembering which wire is which in a USB cable. The other problem is that in category theory it is bad style to talk about the “inside” of an object. Instead, it is better to talk about how the object relates to other objects. This is more of a problem of aesthetics, however it turns out that by following our nose and having good aesthetics, we can fix the first problem as well.

The solution is that we denote the input with a map i : X \to G which is a monomorphism (injective on nodes and edges). For instance, in the diagram above, the subgraph in green is X, and the map i is the inclusion map. Similarly, we also have a monomorphism o : Y \to G that denotes the output (which is red in the figure). So the formal definition of a graph with “inputs and outputs” is a diagram that looks like this

We call this a “cospan”, and we call X and Y the “feet” of the cospan. Now the problem of choosing an identification between input and output goes away: we can only compose cospans that share a common foot.

How can we use this to glue together the graphs? Let’s first simplify the question to the case where G,H,X,Y,Z are all just sets. The setup is two sets G and H, along with monomorphisms to G and H from Y (injections).

We then form the disjoint union of G and H. This looks like the following:

The problem with G \sqcup H is that each point in Y gets sent to two different points in G \sqcup H. We want to make these points the same point. The technical term for this (I kid you not) is gluing together the images of Y. The result of this is:

We call G \sqcup_Y H the pushout. From now on, I’m going to move back to an abstract notation, because it’s pretty time consuming to draw those big diagrams, and you should practice thinking about that kind of diagram when you see a diagram like this:

The important thing to note is that you can follow the arrows from Y and whichever way you go you will end up in the same place.

The pushout satisfies a universal property. Namely if there is some set K and maps j_G’ : G \to K and j_H’ : H \to K such that the same thing happens (whichever way you go from Y you end up in the same place), then there exists a unique map from G \sqcup_Y H to K such that any way you go in the following diagram you end up in the same place.

With this more abstract definition, it is possible to generalize pushouts from sets to graphs. Namely, the pushout G \sqcup_Y H is the object in the category of graphs that satisfies the above universal property. The intuition for how this works should just be “gluing together along Y”, but now you know the formal definition as well. You should work out a concrete definition for the pushout in the category of graphs that is akin to the concrete definition I gave in the category of sets.

Anyways, we can use this to compose two cospans. First we take the pushout with respect to the “foot” that the cospans have in common (in this case, Y), and then we compose the pushout maps with the maps from the “outer feet” (in this case X and Z) to get our new input and output maps, j_G \circ i_G and j_H \circ o_H.

The Bigger Picture

So far, I’ve just been talking about composing two specific graphs. However, cospans allow us to do much more than that. First of all, we can create a category in which the objects are graphs, and a morphism between G and H is a cospan with G and H as its feet. Then composition works as above. Identity morphisms are what you would expect:

and it is easy to check that this is the identity for the above composition.

However, there is a slight problem, which is that pushouts are only defined up to isomorphism. To get around this, we want to say that really the morphisms are isomorphism classes of cospans. However, I haven’t given you a good definition for morphism between cospans. It turns out that there is a natural way of defining this: a morphism between X \to G \leftarrow Y and X \to G’ \leftarrow Y is a commutative diagram of the form

This points to a deeper structure on the category of cospans: it is in fact a 2-category, which is a category with 2-morphisms between its morphisms. The 2-category that you might be most familiar with is the category of categories. This has functors as morphisms and natural transformations as 2-morphisms. However, for now we are just going to discuss the category that has isomorphism classes of cospans as its morphisms.

You’ve probably noticed at this point that we’ve moved pretty far away from graphs; the only thing that we have used about the category of graphs is that it has pushouts. It turns out cospans are useful for much, much more than just composing graphs. Here is an example. Consider the category of cospans from the category of real vector spaces and linear maps. If the “meaning” of a cospan on the category of graphs was a graph that was annotated with an input and an output, then what is the “meaning” of a cospan on the category of real vector spaces?

I would like to convince you that the “meaning” of a cospan on the category of real vector spaces is a “linear relation”. Consider a cospan f : V \to A \leftarrow W : g. We can use this to define a relation R_A:

v \mathrel{R_{A}} w \quad \text{if and only if} \quad f(v) = g(w)

However, this relation has some structure on it: if v_1 \mathrel{R_{A}} w_1 and v_2 \mathrel{R_{A}} w_2, then (a_1 v_1 + a_2 v_2) \mathrel{R_{A}} (a_1 w_1 + a_2 w_2). This means that \mathrel{R_{A}} is a linear relation.

What does composition mean for two relations presented in this way? Well, we can derive it. Recall the cospan composition diagram:

Now, by definition of A \sqcup_Y B, j_A(i_A(x)) = j_B(o_B(z)) if and only if there exists some y \in Y such that o_A(y) = i_A(x) and i_B(y) = o_B(z). Therefore, x \mathrel{R_{A \sqcup_Y B}} z if and only if there exists some y such that x \mathrel{R_{A}} y and y \mathrel{R_{B}} z.

Why do we care about linear relations? Well, one reason is that steady-state solutions to certain circuit diagrams can be modelled by vectors of voltages. Therefore, a circuit gives a relation between voltages of its input and voltages of its output. Moreover, this relation is in fact linear! We can add together two solutions of the circuit laws, and we get another solution!

In light of this, the law for composition of relations makes a lot of sense: x is compatible with z if and only if there is some intermediate y that is compatible with both!

Problems

I’m going to wrap up this post now, because I want to keep it around this length, but I’m going to tell you some things that should nag at you and make you worry and then make you anxious to read my next post.

First of all, I never gave a full description of electronic circuits. The problem here is that we want the “feet” to be a particular kind of simple circuit, namely a collection of single nodes (think about a USB cable: there are just 4 nodes that you connect with another set of 4 nodes). However, the apex of the cospan might be very complicated. We need a way of addressing this.

Also, we would like to provide semantics for electronic circuits: we want a description of how electricity flows through them in such a way that we can take the description for an overdrive pedal, and take the description for a distortion pedal and get a description of the combined effects of overdrive and distortion. We started to get at that with the category of linear relations, but we want to also consider dynamics.

Finally, we want to also be able to compose circuits not just in series but also in parallel. Spoiler alert: this involves string diagrams.

For the answers to these questions and more, tune in in a week or two!